Uncertainty-guided learning with scaled prediction errors in the basal ganglia.

When feedback is less reliable, it should have a smaller influence on learning. This paper proposes how this principle could be implemented by the nerve cell circuits responsible for reinforcement learning in a brain region known as the basal ganglia. It describes a computational model in which the activity of nerve cells releasing the chemical messenger dopamine is scaled by the uncertainty of rewards, which accounts for the observed patterns of activity of these cells.

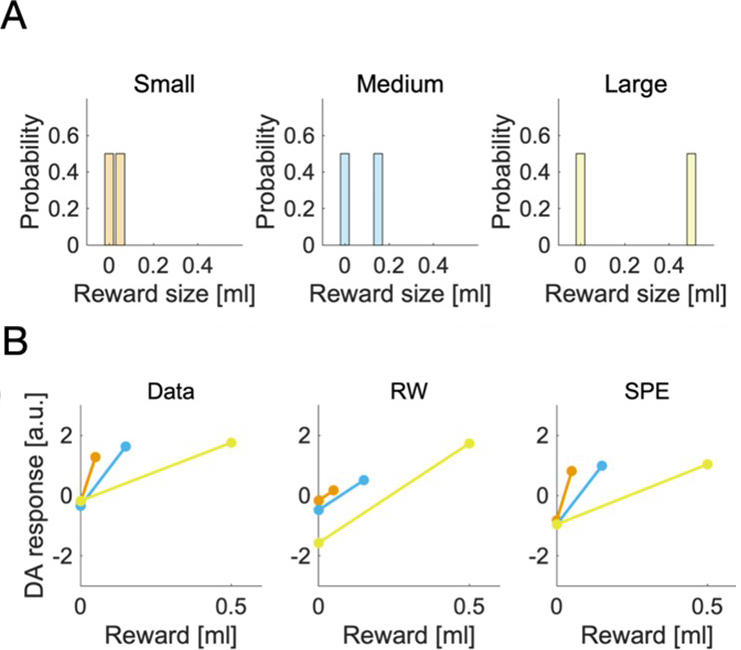

To accurately predict the rewards associated with states or actions, the variability of observations has to be taken into account. In particular, when observations are noisy, individual rewards should have less influence on the tracked average reward, and the estimate of the mean reward should be updated to a smaller extent after each observation. However, it is not known how the magnitude of observation noise might be tracked and used to control prediction updates in the brain's reward system. Here, we introduce a new model that uses simple, tractable learning rules to track the mean and standard deviation of reward, and relies on prediction errors scaled by uncertainty as its central feedback signal. We show that the new model has an advantage over conventional reinforcement learning models in a value tracking task, and approaches a theoretical limit of performance provided by the Kalman filter. Further, we propose a possible biological implementation of the model in the basal ganglia circuit. In the proposed network, dopaminergic neurons encode reward prediction errors scaled by the standard deviation of rewards. We show that such scaling may arise if striatal neurons learn the standard deviation of rewards and modulate the activity of dopaminergic neurons. The model is consistent with experimental findings on the scaling of dopamine prediction errors relative to reward magnitude, and with many features of striatal plasticity. Our results span the levels of implementation, algorithm, and computation, and may have important implications for understanding the dopaminergic prediction error signal and its relation to adaptive and effective learning.
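The abstract describes learning rules that track the mean and standard deviation of reward using prediction errors scaled by uncertainty, benchmarked against the Kalman filter. The Python sketch below illustrates that idea under stated assumptions: the update equations, the function names (`scaled_pe_learner`, `kalman_gain_steady_state`) and the parameters (`alpha_mu`, `alpha_sigma`, `sigma_min`) are hypothetical choices consistent with the description, not the authors' published equations. For comparison, it also iterates the standard scalar Kalman variance recursion for a mean that drifts as a Gaussian random walk, showing how the optimal learning rate shrinks as observation noise grows.

```python
import random


def scaled_pe_learner(rewards, alpha_mu=0.05, alpha_sigma=0.01,
                      mu0=0.0, sigma0=1.0, sigma_min=1e-3):
    """Track the mean (mu) and standard deviation (sigma) of a reward stream.

    Hypothetical update rules (illustrative, not the paper's equations):
        delta = (r - mu) / sigma                      # scaled prediction error
        mu    <- mu + alpha_mu * delta                # effective rate alpha_mu / sigma
        sigma <- sigma + alpha_sigma * (delta**2 - 1)
    The sigma rule is at equilibrium on average when E[delta**2] = 1, i.e. when
    sigma matches the root-mean-square prediction error.
    """
    mu, sigma = mu0, sigma0
    for r in rewards:
        delta = (r - mu) / sigma      # prediction error in units of sigma
        mu += alpha_mu * delta        # noisier rewards -> larger sigma -> smaller step
        # Grow sigma when errors are larger than expected, shrink it otherwise;
        # the floor sigma_min (an assumption) keeps the division well defined.
        sigma = max(sigma + alpha_sigma * (delta ** 2 - 1.0), sigma_min)
    return mu, sigma


def kalman_gain_steady_state(obs_var, drift_var, steps=1000):
    """Scalar Kalman filter for a mean that drifts as a Gaussian random walk
    (variance drift_var per trial) and is observed with noise variance obs_var.
    Iterates the variance recursion and returns the converged gain, which
    plays the role of the learning rate in a mean update mu <- mu + k * (r - mu)."""
    p = 1.0  # initial posterior variance of the mean estimate (arbitrary)
    k = 0.0
    for _ in range(steps):
        p_pred = p + drift_var            # predict: uncertainty grows with drift
        k = p_pred / (p_pred + obs_var)   # gain shrinks as observation noise grows
        p = (1.0 - k) * p_pred            # update: posterior variance after observing
    return k


if __name__ == "__main__":
    rng = random.Random(0)
    for sd in (1.0, 4.0):
        rewards = [rng.gauss(5.0, sd) for _ in range(20000)]
        mu, sigma = scaled_pe_learner(rewards)
        print(f"true sd={sd}: mu={mu:.2f}, sigma={sigma:.2f}, "
              f"effective rate={0.05 / sigma:.4f}, "
              f"Kalman gain={kalman_gain_steady_state(sd ** 2, 0.01):.4f}")
```

With the default settings, the sketch's effective learning rate `alpha_mu / sigma` falls roughly fourfold when the reward standard deviation rises from 1 to 4. For slow drift (drift variance q much smaller than observation variance R), the steady-state Kalman gain is approximately sqrt(q/R), so it also scales with 1/σ of the observation noise. This offers one illustrative way to see why dividing prediction errors by a learned standard deviation can move a delta-rule learner toward Kalman-like behaviour.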

2022. PLoS Comput Biol, 18(5): e1009816.
