Theories of Error Back-Propagation in the Brain

This paper summarises recent ideas on how networks of nerve cells in the brain could learn according to an efficient method called “error back-propagation”. This method is widely used to train artificial neural networks, but for the last 30 years it has been thought to be too complex for the brain to use. The theories reviewed here challenge this accepted dogma, showing how the brain could achieve such effective learning.

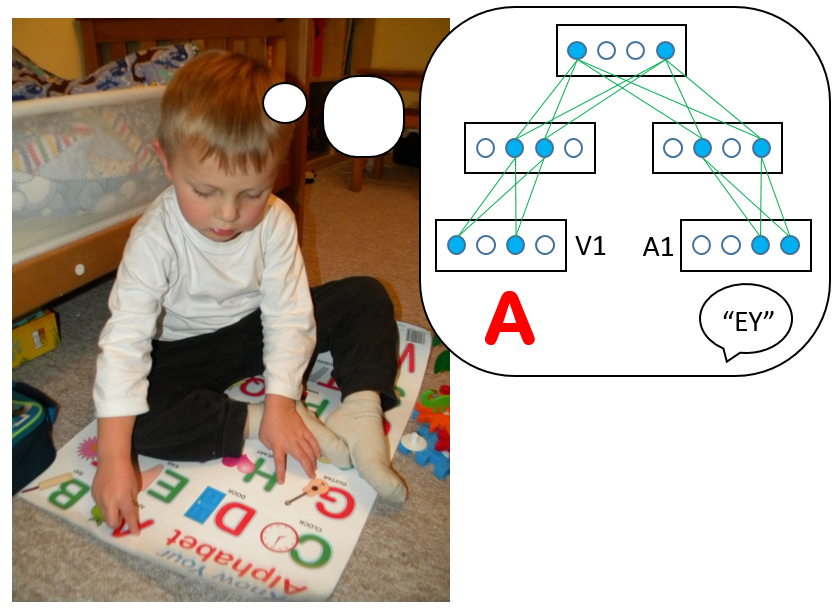

This review article summarises recently proposed theories on how neural circuits in the brain could approximate the error back-propagation algorithm used by artificial neural networks. Computational models implementing these theories learn as efficiently as artificial neural networks, but they use simple synaptic plasticity rules based on the activity of presynaptic and postsynaptic neurons. The models share key features, such as including both feedforward and feedback connections, which allow information about error to propagate throughout the network. Furthermore, they incorporate experimental evidence on neural connectivity, responses, and plasticity. These models provide insights into how brain networks might be organised such that modification of synaptic weights at multiple levels of the cortical hierarchy leads to improved performance on tasks.
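
To make the idea of a local plasticity rule concrete, the following is a minimal sketch and not an implementation of any specific model covered by the review. It assumes a tiny network with one tanh hidden layer and a linear output, and it assumes that the feedback pathway carries the output error back through the same weights as the feedforward pathway (the models in the review differ on exactly how this is realised). Under those assumptions, each weight change is the product of a postsynaptic error term and presynaptic activity, and it coincides with the negative gradient that back-propagation would compute. The names `forward`, `loss`, and `local_updates` are hypothetical and chosen only for illustration.

```python
import math


def forward(W1, W2, x):
    """Feedforward pass: input -> tanh hidden layer -> linear output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = [sum(w * hj for w, hj in zip(row, h)) for row in W2]
    return h, y


def loss(W1, W2, x, target):
    """Half the squared error between the target and the network output."""
    _, y = forward(W1, W2, x)
    return 0.5 * sum((t - yk) ** 2 for t, yk in zip(target, y))


def local_updates(W1, W2, x, target):
    """Weight changes computed as (postsynaptic error) * (presynaptic activity).

    Assumption: the hidden-layer error is delivered by feedback connections
    that mirror the feedforward weights W2.
    """
    h, y = forward(W1, W2, x)

    # Error at the output layer.
    e_out = [t - yk for t, yk in zip(target, y)]

    # Error at the hidden layer: output error sent back through W2,
    # scaled by the slope of tanh at the hidden unit (1 - h^2).
    e_hid = [
        (1.0 - h[j] ** 2) * sum(W2[k][j] * e_out[k] for k in range(len(W2)))
        for j in range(len(h))
    ]

    # Each update uses only quantities available at the two ends of the synapse.
    dW2 = [[e_out[k] * h[j] for j in range(len(h))] for k in range(len(W2))]
    dW1 = [[e_hid[j] * x[i] for i in range(len(x))] for j in range(len(W1))]
    return dW1, dW2


if __name__ == "__main__":
    # Arbitrary illustrative weights, input, and target.
    W1 = [[0.2, -0.4], [0.7, 0.1], [-0.3, 0.5]]
    W2 = [[0.6, -0.2, 0.3]]
    x, target = [0.5, -1.0], [0.8]

    lr = 0.1
    before = loss(W1, W2, x, target)
    dW1, dW2 = local_updates(W1, W2, x, target)
    W1 = [[w + lr * d for w, d in zip(rw, rd)] for rw, rd in zip(W1, dW1)]
    W2 = [[w + lr * d for w, d in zip(rw, rd)] for rw, rd in zip(W2, dW2)]
    after = loss(W1, W2, x, target)
    print("loss before:", before, "loss after:", after)
```

The sketch only illustrates why an error signal delivered by feedback connections is enough to make a simple product rule follow the gradient. It says nothing about how a biological circuit would represent the error terms, which is the question the reviewed theories address.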