Can the Brain Do Backpropagation? Exact Implementation of Backpropagation in Predictive Coding Networks

This paper proposes how networks of nerve cells in the brain could learn according to an efficient method called "error backpropagation". This method is widely used to train artificial neural networks, but it is not clear whether the brain could use it. Previous studies only described how this method could be approximated by networks of nerve cells; this paper shows for the first time how it could be implemented exactly.

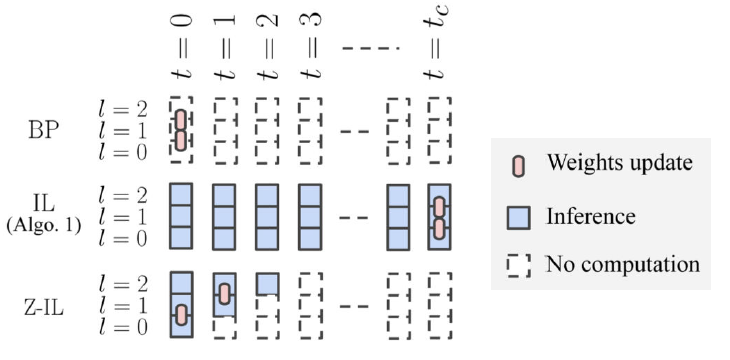

Backpropagation (BP) has been the most successful algorithm used to train artificial neural networks. However, there are several gaps between BP and learning in biologically plausible neuronal networks of the brain (learning in the brain, or simply BL, for short). In particular, (1) it has been unclear to date whether BP can be implemented exactly via BL; (2) BP lacks local plasticity, i.e., its weight updates require information that is not locally available, while BL uses only locally available information; and (3) BP lacks autonomy, i.e., it requires some external control over the neural network (e.g., switching between prediction and learning stages requires changes to dynamics and synaptic plasticity rules), while BL works fully autonomously. Bridging these gaps, i.e., understanding how BP can be approximated by BL, has been of major interest in both neuroscience and machine learning. Despite tremendous efforts, however, no previous model has bridged the gaps to the degree of demonstrating an equivalence to BP; only approximations to BP have been shown. Here, we present for the first time a framework within BL that bridges the above crucial gaps. We propose a BL model that (1) produces the same updates of the neural weights as BP, while (2) employing local plasticity, i.e., all neurons perform only local computations, done simultaneously. We then modify it to an alternative BL model that (3) also works fully autonomously. Overall, our work provides important evidence for the debate on the long-disputed question of whether the brain can perform BP.
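To make the contrast between BP and a local learning rule concrete, the following is a minimal NumPy sketch, not the authors' algorithm. The abstract does not state the mechanism, so everything here is an assumption: a textbook predictive coding energy (sum of squared prediction errors), hidden value nodes initialized by a forward pass so that their errors start at zero, an inference step size of 1, a schedule in which the weights into layer `L - t` are updated at inference step `t`, and weight changes that are collected rather than applied during inference. All function names (`forward`, `bp_updates`, `pc_updates`) are hypothetical. Under these assumptions, each weight change uses only the error of the layer it feeds and the activity of the layer it reads from, and it can be compared numerically with the BP update.

```python
import numpy as np

def f(x):
    return np.tanh(x)

def df(x):
    return 1.0 - np.tanh(x) ** 2

def forward(weights, x_in):
    """Pre-activations a[0..L]; a[0] is the input, a[l] = W_l f(a[l-1])."""
    a = [x_in]
    for W in weights:
        a.append(W @ f(a[-1]))
    return a

def bp_updates(weights, x_in, y):
    """Negative gradient of 0.5 * ||y - a_L||^2 for each W_l (standard BP)."""
    a = forward(weights, x_in)
    L = len(weights)
    delta = y - a[L]
    updates = [None] * L
    for l in range(L, 0, -1):
        updates[l - 1] = np.outer(delta, f(a[l - 1]))
        if l > 1:
            # Non-local step: the error is carried backwards through W_l.
            delta = df(a[l - 1]) * (weights[l - 1].T @ delta)
    return updates

def pc_updates(weights, x_in, y, gamma=1.0):
    """Predictive coding sketch under the assumptions stated in the lead-in."""
    L = len(weights)
    x = forward(weights, x_in)  # hidden prediction errors start at zero
    x[L] = y.copy()             # clamp the output value nodes to the target
    updates = [None] * L
    for t in range(L):
        # Prediction errors eps_l = x_l - W_l f(x_{l-1}) for l = 1..L.
        eps = [None] + [x[l] - weights[l - 1] @ f(x[l - 1])
                        for l in range(1, L + 1)]
        # Local rule: W_l changes using only eps_l and f(x_{l-1}).
        l = L - t
        updates[l - 1] = np.outer(eps[l], f(x[l - 1]))
        # Simultaneous inference step for all hidden value nodes.
        new_x = list(x)
        for k in range(1, L):
            new_x[k] = x[k] + gamma * (-eps[k] + df(x[k]) * (weights[k].T @ eps[k + 1]))
        x = new_x
    return updates

# Hypothetical toy network: layer sizes 4 -> 5 -> 3 -> 2.
rng = np.random.default_rng(0)
sizes = [4, 5, 3, 2]
weights = [rng.normal(size=(sizes[i + 1], sizes[i])) for i in range(len(sizes) - 1)]
x_in = rng.normal(size=sizes[0])
y = rng.normal(size=sizes[-1])

bp = bp_updates(weights, x_in, y)
pc = pc_updates(weights, x_in, y)
print(all(np.allclose(p, b) for p, b in zip(pc, bp)))
```

In this sketch the two sets of updates agree only because of the specific initialization, step size, and update schedule chosen above; changing any of them (for example, setting `gamma` to 0.5) would be expected to yield an approximation to BP rather than an exact match.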