Inferring neural activity before plasticity as a foundation for learning beyond backpropagation.

This paper proposes that the brain learns in a fundamentally different way than current artificial intelligence systems. It demonstrates that this biological learning mechanism enables faster and more effective learning in many tasks faced by animals and humans in nature.

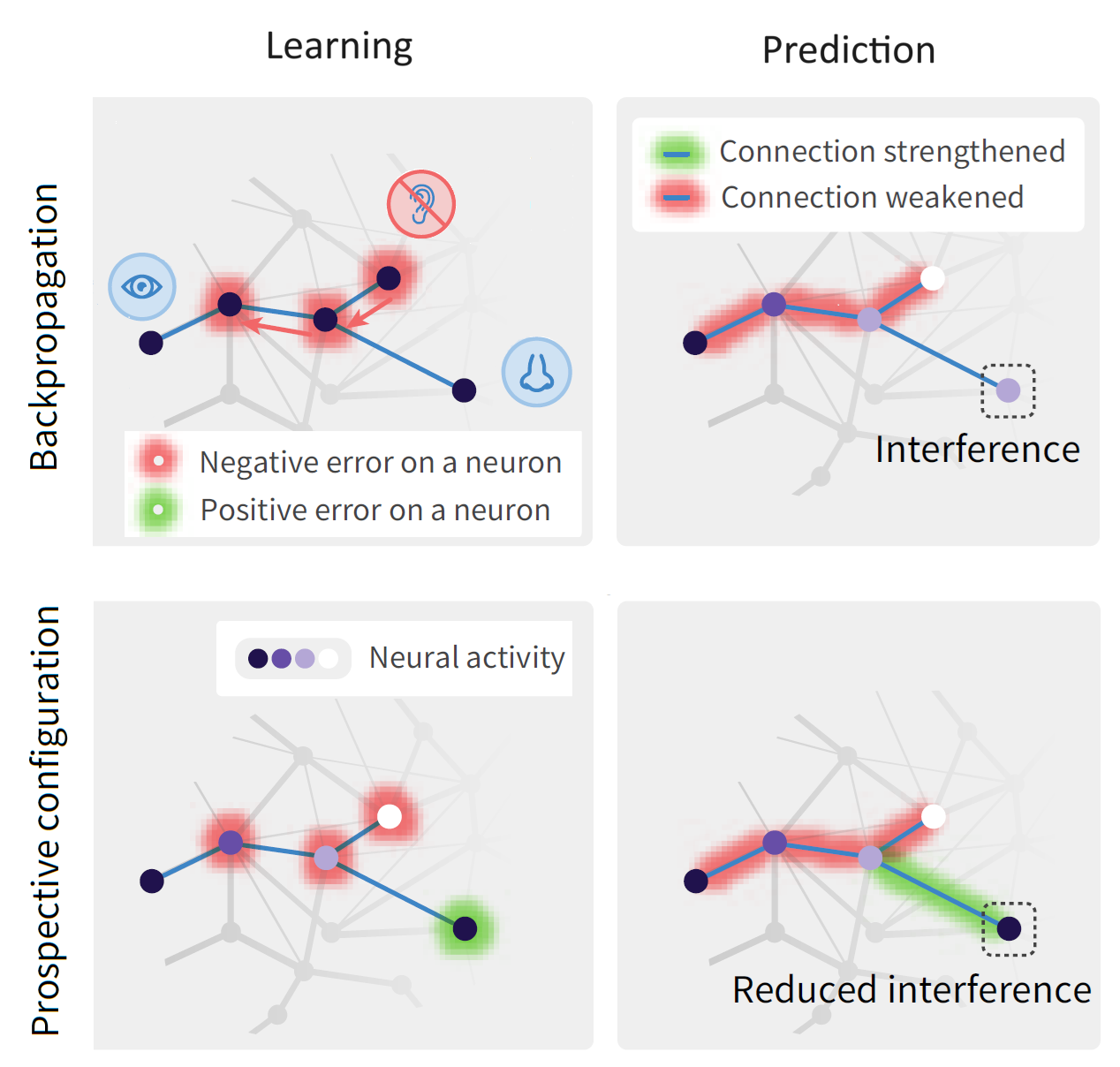

For both humans and machines, the essence of learning is to pinpoint which components of the information-processing pipeline are responsible for an error in the output, a challenge that is known as 'credit assignment'. It has long been assumed that credit assignment is best solved by backpropagation, which is also the foundation of modern machine learning. Here, we set out a fundamentally different principle of credit assignment called 'prospective configuration'. In prospective configuration, the network first infers the pattern of neural activity that should result from learning, and then the synaptic weights are modified to consolidate the change in neural activity. We demonstrate that this distinct mechanism, in contrast to backpropagation, (1) underlies learning in a well-established family of models of cortical circuits, (2) enables learning that is more efficient and effective in many contexts faced by biological organisms and (3) reproduces surprising patterns of neural activity and behavior observed in diverse human and rat learning experiments.
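The two-phase idea described above (infer activity first, then change weights) can be illustrated with a toy sketch. This is not the authors' implementation: it assumes a predictive-coding-style energy on a three-unit scalar chain (input, one hidden unit, output), and the function names `relax_hidden`, `learn_step` and `predict`, the energy function and the step sizes are all hypothetical choices made for illustration.

```python
# Toy sketch of "infer activity, then update weights" on a scalar chain
# x0 -> x1 -> x2 with weights w1, w2 (all names and values are assumptions).
# Assumed energy: E = 0.5*(x1 - w1*x0)**2 + 0.5*(x2 - w2*x1)**2


def predict(x0, w1, w2):
    """Feedforward output of the chain."""
    return w2 * (w1 * x0)


def relax_hidden(x0, target, w1, w2, steps=200, lr=0.1):
    """Phase 1: with weights fixed and the output clamped to the target,
    settle the hidden activity x1 by gradient descent on the energy."""
    x1 = w1 * x0  # start from the feedforward prediction
    for _ in range(steps):
        e1 = x1 - w1 * x0      # error at the hidden unit
        e2 = target - w2 * x1  # error at the clamped output unit
        x1 -= lr * (e1 - w2 * e2)  # dE/dx1 = e1 - w2*e2
    return x1


def learn_step(x0, target, w1, w2, weight_lr=0.1):
    """Phase 2: move the weights down the energy gradient, evaluated at
    the settled activity, so the inferred pattern is consolidated."""
    x1 = relax_hidden(x0, target, w1, w2)
    e1 = x1 - w1 * x0
    e2 = target - w2 * x1
    w1 += weight_lr * e1 * x0  # -dE/dw1 = e1 * x0
    w2 += weight_lr * e2 * x1  # -dE/dw2 = e2 * x1
    return w1, w2


if __name__ == "__main__":
    x0, target = 1.0, 1.0
    w1, w2 = 0.5, 0.5
    print("error before:", abs(target - predict(x0, w1, w2)))
    for _ in range(300):
        w1, w2 = learn_step(x0, target, w1, w2)
    print("error after: ", abs(target - predict(x0, w1, w2)))
```

In this sketch the hidden activity moves toward a value consistent with the target before any weight changes, which is the ordering the abstract contrasts with backpropagation, where weights are adjusted directly from propagated error gradients.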

2024. Nat Neurosci, 27(2):348-358.

2024. PLoS Comput Biol, 20(11):e1012580.